Major Computer Systems at Clemson University

updated February 13, 2026

Corrections are welcome!

The purpose of this page is to provide a list of the major

computer systems that have been installed at locations

owned or operated by Clemson University over the years.

This list does not include the numerous personal computers

and workstations that became common at Clemson University

from the 1980s onwards unless they were organized into a

cluster. Note that many of the clusters listed below were

upgraded over the years, and what appears below is typically

the initial configuration.

This list omits the large secondary storage systems

installed at Clemson University over the years, including

a StorageTek STC 4305 early solid-state disk in 1980,

a StorageTek STK 4400 Library Storage Module for automatic tape

cartridge handling in 1988, and the Indigo Data Lake in 2024.

It also omits the many special-purpose I/O

devices acquired over the years, including large plotters

and numerous head-mounted displays for virtual reality.

It is noteworthy that in 2008 Clemson University had two

computer systems appear in the

TOP500 list of the world's fastest supercomputers:

the Palmetto cluster at #61 and the CCMS cluster at #100.

In addition to the university-wide Computer Center systems,

many colleges, departments, and research centers have their

own computers and labs. There are multiple reasons for this

decentralized structure, including:

- alternate operating systems and software packages

to support curriculum and research

- special purpose hardware and software for research

- special security and access privileges for faculty and

students to support research

- directed gifts to that academic unit

The College of Agriculture and the College of Engineering,

along with the Departments of Chemical Engineering, Computer

Science, and Electrical and Computer Engineering,

were early users of minicomputers, microcomputers, and workstations,

as well as of software that differed from what the Computer Center

supported. In some cases this started as early as the 1960s. By the

1990s, many additional academic units and research centers had their

own computer systems and labs.

It is also noteworthy that Walt Ligon's Parallel Architecture Research

Lab (PARL) in the Department of Electrical and Computer Engineering

built multiple Beowulf cluster computing systems in the 1990s

and 2000s, including the Minigrid cluster that became part of Palmetto.

Between 1999 and 2003, PARL also hosted the Beowulf Underground,

which was an important early source of information for research

groups around the world on how to build and use relatively

inexpensive Beowulf clusters.

Major Sections

This page is one of a series of timelines and highlights

about the history of computing at Clemson University:

Note on DCIT/CCIT acronyms: the Clemson University Computer Center

became part of the Division of Computing and Information Technology

(DCIT) in 1985, which was renamed as Clemson Computing and Information

Technology (CCIT) in 2007.

Computer Center

Link to references and further discussion

about the Computer Center in general

First Computer

- RPC 4000, 8,008-word magnetic drum memory, installed 1961

Sarah Skelton and Merrill Palmer at RPC 4000.

Photo courtesy of Don Fraser.

- References and further discussion

Mainframes

- IBM S/360 Model 40, 64 KiB magnetic core memory, 1966

- memory upgrade to 128 KiB core memory in 1967

- memory upgrade to 256 KiB core memory, 1968

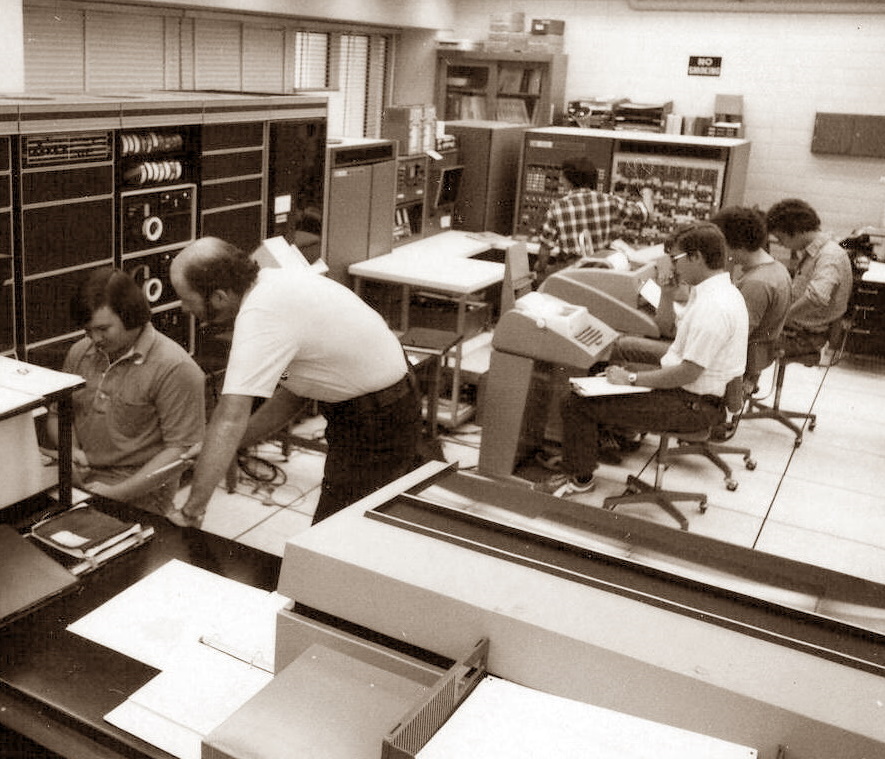

George Alexander at the Model 40 console.

Photo from 1970-1971 Student Handbook.

- IBM S/360 Model 50, 512 KiB core memory, 1970

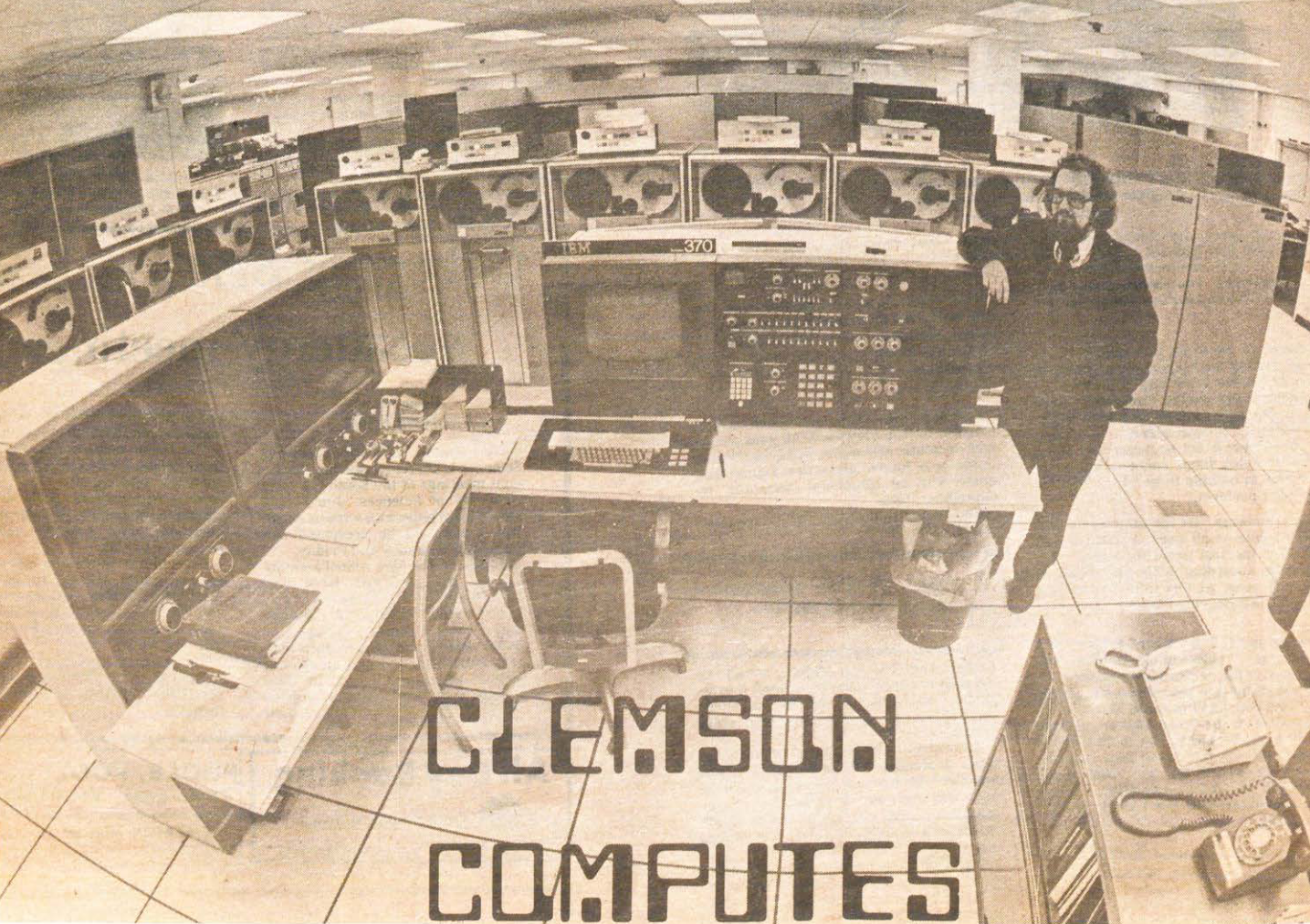

- IBM S/370 Model 155, 1 MiB core memory, 1972

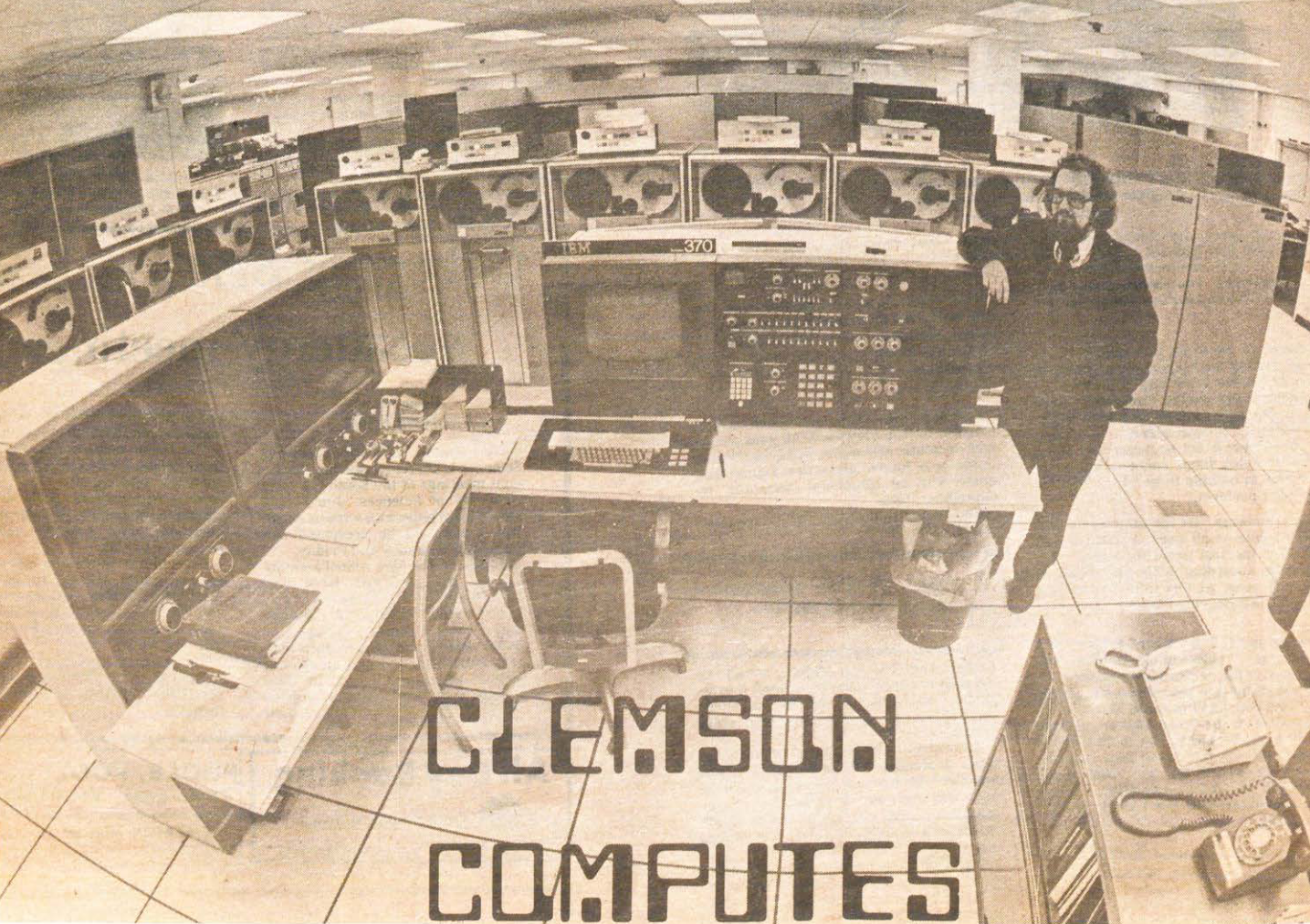

Matt Laiewski at the Model 155 console.

Photo from clipping of Anderson

Independent, December 15, 1972, in

Series_0037_Computer Center 1970s_A.

- IBM S/370 Model 158, 1.5 MiB core memory, 1974

- later memory upgrade to 3 MiB core memory

Doug Dawson at the Model 158 console.

Photo from 1976 TAPS yearbook.

- IBM S/370 Model 165-II, 4 MiB core memory, 1976

- later memory upgrade to 8 MiB core memory

Page Hite, Jr., standing beside the 165-II console.

Photo from The Tiger, March 31, 1978.

- IBM 3033, 8 MiB semiconductor memory, 1979

YouTube video,

"Clemson Computer Center Tour, 1980,"

including a line printer playing the Tiger Rag at minute 9:30 of the video

- memory upgrade to 12 MiB memory, 1981

- IBM 3081-K, 24 MiB memory, 1982

- later memory upgrade to 32 MiB memory

- NAS AS/XL-60, 128 MiB memory, 1986

AS/XL-60 when being installed.

Photo courtesy of Clemson University Libraries

Special Collections and Archives.

- vector processor upgrade to NAS AS/VXL-60, 1987

- Note that Hitachi bought NAS in 1989, so NAS systems then

became HDS systems.

- HDS AS/EX-80, 128 MiB memory, 1990

- extra processor upgrades to HDS AS/EX-90 then

HDS AS/EX-100, 1994

Photos from computing@clemson.edu

newsletter, vol. 3, no. 3, Spring 1998.

- HDS Pilot 25, 1 GiB central memory,

215 GiB expanded memory, 1997

- extra processor upgrade to HDS Pilot 35, 1999

- reversion to HDS Pilot 25, 2000

- back to HDS Pilot 35, 2002

- IBM z800, 8 GiB memory, 2002

Photo from computing@clemson.edu newsletter,

vol. 8, no. 2, Winter 2002-2003.

- IBM z10 Business Class, 2009

- ca. 2015, the final enterprise mainframe applications for the

university were converted to or replaced by software packages that

run on other platforms.

Since that point, the mainframe has run only SC DHHS applications.

- IBM z14, 2019

- References and further discussion about

the mainframe computers

VAX and Sun Servers

- Clemson University acquired two VAX-11/780 computers in 1982,

one in January and the second in July. They were first located in

Poole Hall, awaiting the renovation of Room 10 in the basement of

Riggs Hall, called the "Graphics and Research Center".

By fall 1983 both were in Riggs and had been assigned the system

names Grafix and Eureka, to match their intended usage. Later more

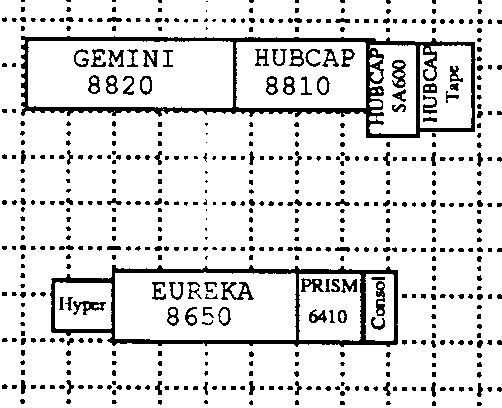

VAXes were added, and additional system names, such as Prism, were used.

System names were retained as older VAXes were retired and newer

ones replaced them; however, in one case in 1993 two existing VAXes,

Eureka and Gemini, switched names so that the more capable VAX 8820

could take over the name and role of Eureka to better meet user demands.

Gair Williams in Riggs Room 10, ca. 1983.

One of the VAX 11/780 systems is on the left wall,

behind the row of disk drives, and the other is on

the back right wall, behind an air conditioning unit.

Photo courtesy of Don Fraser.

-

Note: Clemson University had acquired some PDP-11s for the Computer Center

and later some MicroVAX II systems for Housing, University Relations,

and other groups; however, I am not cataloging these smaller machines.

- Eureka

- DEC VAX-11/780, 6 MiB, running VMS (primarily for research)

- DEC VAX 8600, 12 MiB, 1986

- DEC VAX 8650, 32 MiB, 1990

- DEC VAX 8820, 128 MiB, 1993

- retired 1994

- Grafix

- DEC VAX-11/780, 6 MiB, running VMS (for graphics applications,

with graphics workstations attached)

- retired 1986

- Prism

- DEC VAX-11/780, 8 MiB, running VMS, 1986 (for email?)

- DEC VAX 8650, 32 MiB, 1986

- DEC VAX 6000-410, 64 MiB, 1988

- became one of the two paired CLUST1 machines of the VAXcluster, 1990

- DEC VAX 6000-610, 256 MiB, running OpenVMS, 1994

- was scheduled to be decommissioned in summer 1997

- hubcap

- DEC VAX-11/750, 6 MiB, running ULTRIX, 1985

- DEC VAX-11/780, 8 MiB, running ULTRIX, 1986

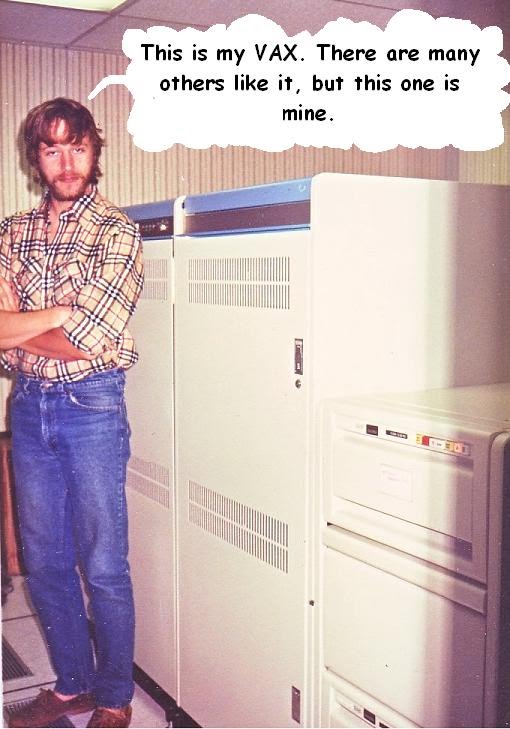

Mike Marshall standing

beside hubcap in 1987.

Photo courtesy of Mike Marshall.

- DEC VAX 8810, 112 MiB, running ULTRIX, 1988

- DEC 3000 model 500, 96 MiB, running OSF/1, 1994

- DEC Alpha 2100 4/275, running Digital UNIX, 1996

- Sun HPC 3000, running Solaris 2.6 (SunOS 5.6), 1998 to at least 2005

- 1.5 GiB of main memory, six 275 MHz processors, and a

refrigerator-sized RAID disk subsystem containing more

than 135 GB of disk storage [Condrey, et al., 1998]

- CUFAN (Clemson University Forestry and Agriculture Network)

- running on Eureka, DEC VAX 8600, 12 MiB, 1986

- running on Eureka, DEC VAX 8650, 48 MiB, 1990

- funded by College of Agriculture

- see

more information on the CUFAN network and videotex system

- CC

- DEC VAX-11/750 running VMS, 1986

- this may have been a machine that was used to develop

the videotex system as well as some research on multi-vendor

office automation interoperability

- DISD

- DEC VAX-11/750 running VMS, 1986

- used to develop administrative software for

many of the SC technical colleges

- Gemini

- DEC VAX 8820 dual processor, 128 MiB, 1988

- became one of the two paired CLUST1 machines of the VAXcluster, 1990

- DEC VAX 8650, 32 MiB, 1993

- retired 1994

- VAXcluster with shared disk pool

- started with the two 11/780 computers

- ca. 1985: three 11/780s

- ca. 1986: 8600 (Eureka), 8650 (Prism)

- ca. 1988: 8600, 8650, 8820

- ca. 1992: 8650, 8820, and 6000-410

- DEC VAXstation 4000-90 ("BATCH1") added to cluster in 1993

- Sun SPARCserver 1000, 1994

- a production system platform used for hosting the Clemson University

Data Warehouse and for gaining further experience in migrating

several online transaction processing applications from the

mainframe environment to a client/server environment

- Helios

- Sun HPC 6000, research-only, 1998

- 4 GiB of main memory, sixteen 275 MHz processors, and a

RAID subsystem similar to hubcap [Condrey, et al., 1998]

- computing@clemson.edu newsletter, vol. 4, no. 1, says the

processors were initially 250 MHz and funding was committed

to upgrade to 336 MHz each

- mail servers after hubcap

- Sun Ultra Enterprise 3000, 1996

- four 248 MHz processors, 1 GiB memory

- StorageWorks RAID Array 410 with a 24 GB RAID-5 RAIDset

- Sun Fire V880, 2003

- eight 750 MHz UltraSPARC III processors, 32 GiB memory

- T3 Fiber storage array

- References and further discussion about

the VAX and Sun servers

Condor

- Condor was a distributed system designed at the University of

Wisconsin in the mid-1980s to achieve high throughput computing

by scavenging unused cycles on otherwise idle computers. Starting

in 2007, Sebastien Goasguen led a team at Clemson University

to deploy Condor on the CCIT lab computers.

- References and further discussion

Palmetto

-

initial description in October 2007:

A "condo cluster" with 20 TF peak performance using 256 nodes;

8 cores per node; 12 GB/node; and 80 GB disk/node. This system

also contains 120 TB of large-scale, high performance disk

storage and is housed at Clemson's Information Technology Center.

- Palmetto was listed in November 2008 as the 61st fastest

supercomputer in the world in the

TOP500 list.

- As the cluster grew, faster nodes and faster interconnect

were added in phases. This type of cluster is termed "heterogeneous",

and you can see changes in the system characteristics over the years

at the

Clemson University entry at top500.org.

Promotional photo, not dated.

-

CCIT video of Palmetto for Supercomputing 2020,

which documents the early phases of Palmetto

-

CCIT time lapse video of installing 65 new nodes in Palmetto, 2024

- A brief history of Palmetto and a discussion of the

transition to Palmetto 2 are given in Asher Antao, et al.,

"Modernizing Clemson University's Palmetto Cluster: Lessons

Learned from 17 Years of HPC Administration,"

Practice & Experience in Advanced Research Computing (PEARC)

Conference, article 14, 2024, pp. 1-9.

Figure from the Antao, et al., paper.

- References and further discussion

Palmetto 2

- In 2024, CCIT introduced Palmetto 2, a "second iteration" of

Palmetto. See

Bailey Troutman,

"Palmetto 2 Goes Live to Elevate Clemson Research and Computing,"

Clemson News, May 13, 2024.

- As of January 2026, Palmetto 2 contains:

- 1,201 compute nodes, totaling 54,636 cores

- 21 large-memory nodes with over 1.5 TB of RAM each

- 3 extra-large-memory nodes with over 3 TB of RAM each

- 10 and 25 Gbps Ethernet networks

- 56, 100, 200, and 400 Gbps Infiniband networks

- benchmarked at 3.0 PFlops

- See the current description

here.

- References and further discussion

Cypress

- a dedicated Hadoop environment integrated with Palmetto

- 3.64 PB (petabyte) global Hadoop Distributed File System (HDFS)

- 40 worker nodes (responsible for computation and data storage)

- 256 GiB of RAM per node

- 16 nodes each have 12 1-TB local disks

- 24 nodes each have 24 6-TB local disks

- one dedicated Cypress Cluster user node for job submission

and data staging
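
The disk figures in the Cypress list can be cross-checked against the stated 3.64 PB file system size. The short Python sketch below simply sums the local disks of the two groups of worker nodes. It assumes decimal units (1 PB = 1000 TB) and that the quoted HDFS size refers to raw disk capacity; the source does not say whether replication overhead is included. The variable names are illustrative only.

```python
# Raw local-disk capacity of the Cypress worker nodes, using the figures in the list above.
# Assumption: decimal units, i.e. 1 PB = 1000 TB.
node_groups = [
    # (number of nodes, disks per node, TB per disk)
    (16, 12, 1),
    (24, 24, 6),
]

worker_nodes = sum(nodes for nodes, _, _ in node_groups)
raw_tb = sum(nodes * disks * tb for nodes, disks, tb in node_groups)
raw_pb = raw_tb / 1000

print(f"{worker_nodes} worker nodes, {raw_tb} TB raw = {raw_pb:.3f} PB")
```

The two groups add up to the 40 worker nodes in the list, and the raw total of 3,648 TB agrees with the listed 3.64 PB when truncated to two decimal places.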

Lakeside

- A research cluster that was built for a partnership with Dell.

This partnership opportunity, however, did not materialize, and the

cluster was later merged into Palmetto.

- 1 head node Dell PowerEdge R620 with 2 x Intel Xeon E5-2665

@ 2.4 GHz CPUs / 32 GiB RAM

- 1 scheduler node Dell PowerEdge R620 with 2 x Intel Xeon E5-2665

@ 2.4 GHz CPUs / 32 GiB RAM

- 1 login user node Dell PowerEdge R620 with 2 x Intel Xeon E5-2665

@ 2.4 GHz CPUs / 32 GiB RAM

- 88 Dell PowerEdge C6220 compute nodes with 2 x Intel Xeon E5-2665

@ 2.4 GHz CPUs / 64 GiB RAM

- 8 Dell PowerEdge R720 GPU Compute nodes with 2 x Intel Xeon E5-2665

@ 2.4 GHz / 64 GiB RAM / 2 x Nvidia M2070 GPUs

- 40G QLogic Infiniband interconnect

CloudLab

- CloudLab is an NSF-funded testbed that allows

researchers to build distributed computer systems, with

administrative privileges, for research experiments in cloud computing.

Clemson has been involved in the project and has provided hardware resources

since 2014 under the leadership of K.C. Wang.

- References and further discussion

Current Facilities

College of Engineering

- Engineering Computer Laboratory

- Engineering Sun Network / Computer and Network Services (CNS)

- several Sun servers starting in the 1980s, including omni,

which in 1989 was a Sun 4/280

- a dedicated system staff supported the numerous workstations,

servers, personal computers, and laptops within the college

Chemical Engineering

- Process Control Computer Laboratory

- EAI TR-48/DES-30 analog/hybrid computer, 1968 or earlier

- GE 312 process control computer, 1968

Robert Edwards (University President),

Duane Bruley, and Charles Littlejohn, Jr.,

with the EAI system against the back wall on

the left side and the GE 312 on the right.

Photo from The Tiger, August 30, 1968.

- References and further discussion

Electrical and Computer Engineering

- DEC CLASSIC 8, 1966

- a 1975 equipment list included the CLASSIC 8 and the two College of

Engineering systems listed above, plus:

- DEC PDP-8 with laboratory hardware interface

(for a gas chromatograph or sampling oscilloscope)

- DEC PDP-8/EAI TR-48 Hybrid

- DEC PDP-11 running RSTS

- DEC PDP-16

- INTEL INTELLEC-8/Mod 80 microcomputer development platform

- ECE Graduate Computer Laboratory

- established by Jim Leathrum

- Harris 800, 512 KiB, 1983

- Parallel Architecture Research Lab (PARL)

- established by Walt Ligon

- Clemson Dedicated Cluster Parallel Computer, ca. 1996

- 12 DEC Alpha CPUs at speeds ranging from 175 to 300 MHz

- connected by a fiber optic crossbar switch

Photo courtesy of Walt Ligon.

- Grendel, also known as the Clemson Beowulf Cluster, ca. 1997

- 16 150 MHz Pentium processors with 64 MiB of RAM and 2 GB of

disk each

- an additional processor used as the system host

- two switched 100 Mbps Fast Ethernet networks

- a bus network using a stack of AsanteFast 100 Hubs

- a full-duplex switched network using a Bay Networks 28115/ADV

fast Ethernet switch

Photo courtesy of Walt Ligon.

- 5-node Travelwulf, ca. 2000

- each node could fit in a suitcase and then be assembled

into a cluster at a travel destination

Photo capture from PARL

page on Internet Archive.

- 8-node Webwulf, ca. 2000

Photo capture from PARL

page on Internet Archive.

- 10-node Aetherwulf, ca. 2002

- description from an

archived PARL web page:

Aetherwulf is a small rack-mountable Beowulf cluster made from

10 single board computers. Each node consists of a 1GHz Pentium

3 processor, 30GB IBM Travelstar laptop drive, 256MB ram, and

build in NIC and video. The case was designed to be clear for

demonstration purposes.

Nathan DeBardeleben and Aetherwulf.

Photo capture from PARL

page on Internet Archive.

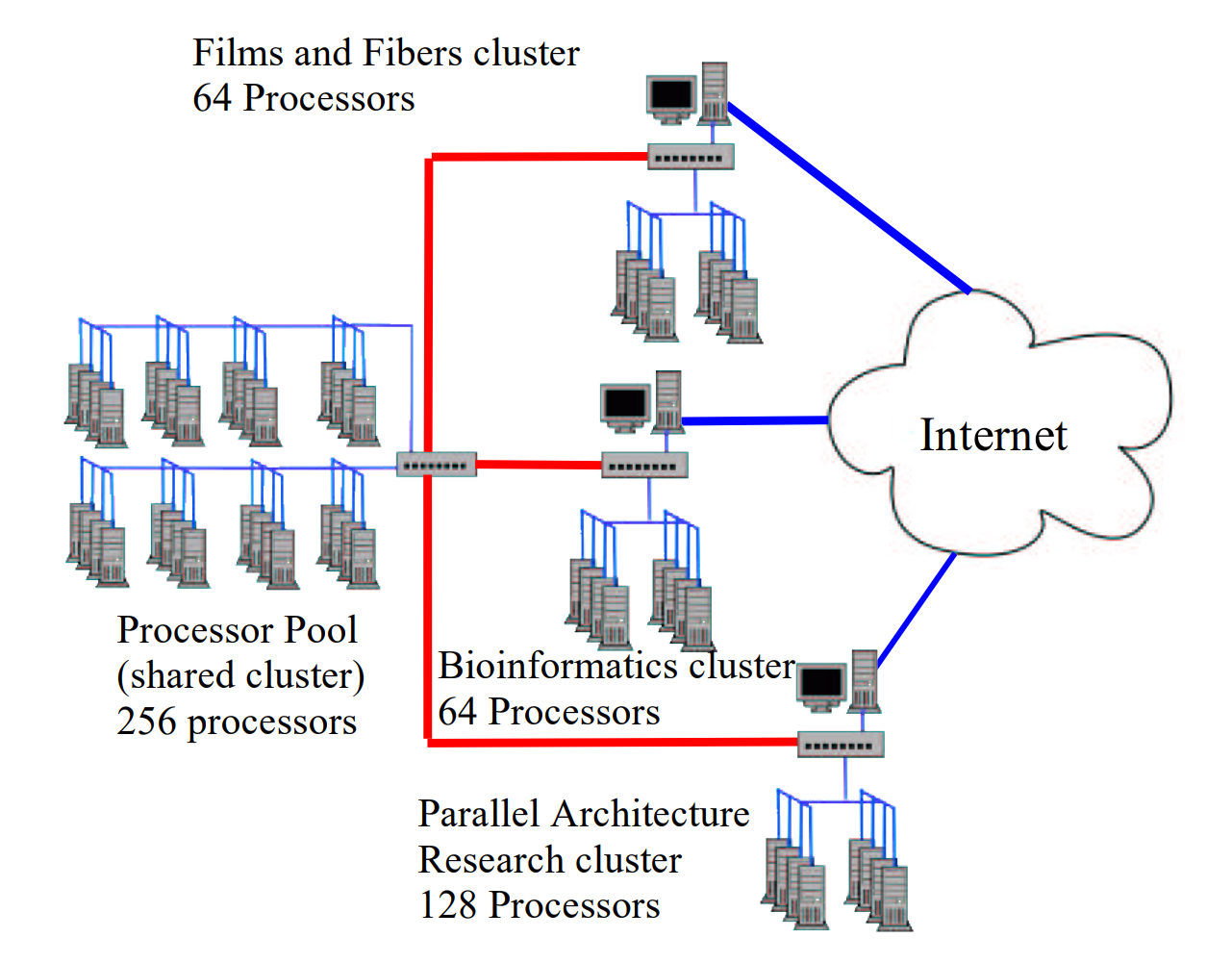

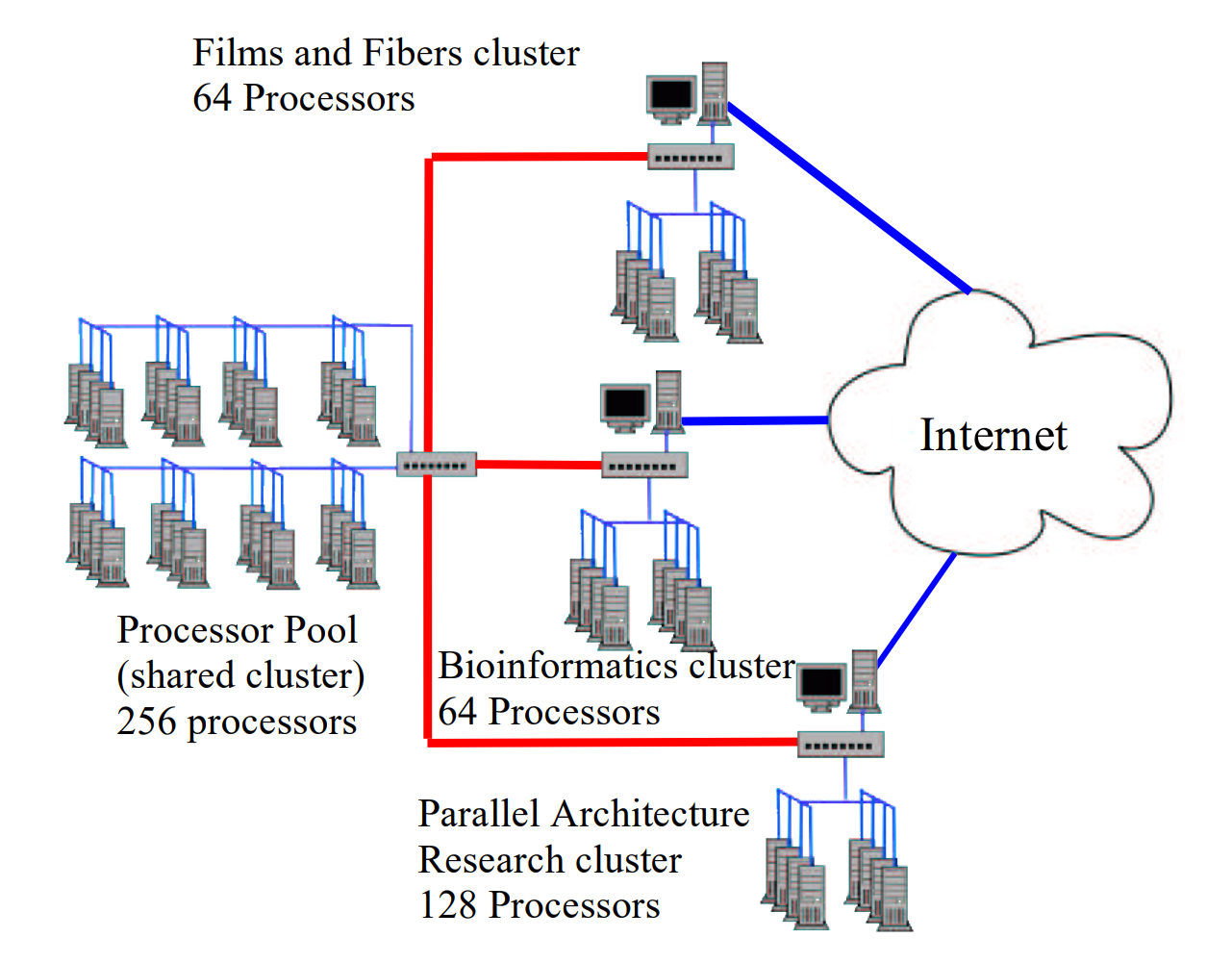

- Clemson Computational Minigrid Supercomputing Facility

- description and diagram from

"Computational Mini-Grid Research at Clemson University,"

in November 2002:

At first glance, the mini-grid may appear to be distinguished

from a conventional computational grid only in scope. A mini-grid

is limited to a campus-wide setting, while a conventional grid in

national or global in scope. However, a mini-grid also has two

distinctive architectural features:

- The grids are composed entirely of Beowulf Clusters

- The internal networks of the client clusters are

interconnected through dedicated links.

These features add an additional layer of complexity in producing

software for these grids. Resources may be shared through the

private network links, so an understanding of some grid services

must be integrated into the system software at the node level of

the client clusters, rather than simply at the head of the cluster.

However, this arrangement provides two primary advantages:

- Finer granularity control of resources within the grid

- Predictable, reliable bandwidth between grid resources

(as opposed to internet connected grids).

The additional granularity of control comes from the fact that

is possible to allocate resources to a particular job on a per

node basis, rather than having to allocate entire clusters or

supercomputers connected to the grid to a single task. In the

Clemson mini-grid, we have developed the facility to "loan" nodes

between clusters, allowing for jobs to span multiple clusters.

- Minigrid racks in PARL

Photo capture from PARL

page on Internet Archive.

- Wireless Communications and Networks research group

- Mike Pursley, Carl Baum, Dan Noneaker, and Harlan Russell

- Holcombe Laboratory for Digital Communications

- "Sun SPARC computational server with 20 processors," rack mounted in

EIB, ca. 2000

- WCNITC cluster, 2007

- one of the first systems housed in CCIT's Palmetto condominium cluster

operations at the ITC

- 1 head node Dell PowerEdge 1950 w/ 2x Intel Woodcrest @ 2.66 GHz CPUs

/ 4 GiB RAM

- 68 compute nodes Dell PowerEdge 1950 w/ 2 x Intel Woodcrest

@ 2.66 GHz CPUs / 8 GiB RAM

- 1 spare Dell PowerEdge 1950 node

- Ethernet 1G interconnect

- ECE references and further discussion in general

- PARL references and further discussion

Chemistry

Computer Science (later School of Computing)

- Test and Evaluation Community Network (TECNET)

- led by Ed Page, Wayne Madison, and Harold Grossman

- four NCR Tower 1632 computers, 1983

- Gould PowerNode 9005, 1988

- departmental Unix machines for research

- all were acquired as partial or full donations based on the efforts

of Robert Geist

- Perkin Elmer 3230, 1984

- AT&T 3B2/300, 1984

- Concurrent (Masscomp) 6700 (4 processors), 1989

- AT&T 3B2/1000 (4 processors), 1989

- Sun 4/330 Workstation, 1990

- multiple systems from Data General Corporation, including

an AViiON 5120 Dual Processor Server and HADA disk array in 1993

- research efforts led by Roy Pargas

- Intel Personal SuperComputer iPSC/2, 1988

- departmental Unix machines and PCs for instruction and research

- several Sun servers starting in the 1980s, including citron,

which in 1989 was a Sun 4/280

- a dedicated system staff supported the numerous workstations,

servers, personal computers, and laptops within the department

and later the School of Computing

- research efforts led by Robert Geist and Mike Westall

- IBM eServer BladeCenter with NetApp NAS, 2004

- initially at most 28 blades, with eventually additional racks

and three generations of blade servers

- e.g., ca. 2010 there were at least six IBM 8853-AC1 dual-Xeon blades

[as described in Zachary Jones' dissertation]

- Digital Production Arts

- led by Jerry Tessendorf

- computer animation pipeline and render farm initiated in 2013

- a user-space queue, called Cheesyq, scheduled frame rendering across

the DPA desktop Linux computers and desktop Linux computers in the

McAdams 110 computer lab (later expanded to other computers)

- at its peak, Cheesyq rendered 12,000 frames per day sustained

for a week or so

- Data Intensive Computing Ecosystems (DICE) lab

- led by Amy Apon

- yoda, ca. 2015

- 1 primary Dell Precision 330, 2.4 GHz Intel Core 2 Quad processor and 4 GiB

of memory, dual-port Gigabit Ethernet card

- 12 client workstations: Sun Ultra20-M2, Dual AMD Opteron, 2 GiB of RAM,

three dual-port Gigabit Ethernet cards per station

- 2 Pica8 Pronto 3290 48-port Gigabit Ethernet switches,

with OpenFlow enabled

- holocron, 2015

- designed as a Hadoop machine

- 18 nodes, each with a dual 10-core Intel Ivy Bridge at 2.2 GHz,

256 GiB memory, 8 x 1 TB local HDD, 8 x 6 TB storage array, and

10 Gbps Ethernet connection

- the nodes are connected to a 40 Gbps switch with SDN support

- Holocron was hosted in Clemson University's data center and

operational from 2015-2018, then it was folded into Clemson

University's portion of CloudLab.

Yash Mishra,

Yuheng Du,

Lili Xu, and

Jason Alexander

in front of holocron.

Photo courtesy of Linh Ngo.

- ScaLab (Scalable Computing and Analytics Lab)

- led by Rong Ge

- Ivy, 2016

- two login nodes and eight compute nodes

- each node has two 12-core Intel Xeon

CPU E5-2670v3 @ 2.30 GHz processors and 128 GiB DDR4 DRAM

evenly distributed between two NUMA sockets

- each node additionally has 1 Nvidia Tesla K40 GPU accelerator

and 1 Intel Knights Corner Xeon Phi accelerator

- DeepGreen, 2020

- five nodes from the original Ivy cluster

placed in a liquid immersion cooling pod

- a server node has two Nvidia TITAN V GPU cards

- References and further discussion

Mathematics

- Floating Point Systems (FPS) T-20 16-node hypercube, 1986

- each node contained:

- a 15 MHz T414 Transputer with 2 KiB of on-chip SRAM

- a vector processor capable of running 16 MFLOPS

- a multiported 1 MiB local memory module

- four communication links, each capable of being

multiplexed four ways, to provide a

hypercube interconnection network

- a DEC MicroVAX II running ULTRIX served as the front end

- axiom - IBM OpenPower cluster, 2005

- 48 compute nodes, 4 interactive nodes, and a head node,

each with two dual-core Power5 64-bit processors @ 1.65 GHz, 4 GiB of

memory and 80 GB of disk

- connected via 2 GB/sec Myrinet

- 550 GB of shared RAID10 disk

- Red Hat Enterprise Linux 5 Advanced Server, GNU and IBM XL

compilers, torque and maui queue manager

- References and further discussion

Physics and Astronomy

Office for Business and Finance

- IBM S/360 Model 20, 8 KiB, 1966

- located in basement of Tillman Hall with separate data processing staff

- memory increased to 16 KiB

- became a Remote Job Entry (RJE) site for the IBM S/360

Model 50 in the Computer Center in 1971

- retired in 1978 and replaced by an IBM 3777-II communications terminal,

which had a card reader and line printer

- References and further discussion

Center for Advanced Engineering Fibers and Films (CAEFF)

- W.M. Keck Visualization Lab in Rhodes Hall

- Silicon Graphics Onyx equipped with the Reality Engine 2 (RE2)

graphics subsystem, 1994

- two 150 MHz MIPS R4400 processors, 128 MiB main memory,

and 4 GB of disk

- RE2 subsystem contains 12 Intel i860XP processors that

serve as Geometry Engines

- Silicon Graphics Onyx2 Infinite Reality Dual Rack,

Dual Pipe System (Reality Monster), 1997

- known as "mickey"

- 8 raster managers and 8 MIPS R10000 processors,

each with 4 MiB secondary cache

- 3 GiB of main memory and 0.5 GiB of texture memory

Andrew Duchowski with head-mounted

displays and Onyx2 system in back right.

Photo courtesy of Andrew Duchowski.

- equipment located in basement of Riggs Hall

- 64-node cluster, 2000

- 265-node distributed rendering system using

commodity components with tiled viewing screen, 2002

- generates images of 6,144 x 3,072 pixels and projects them across a

14' x 7' display at 30 frames per second

- each node has a 1.6 GHz Pentium 4 CPU,

a 58 GB IDE drive, and dual Ethernet cards (NICs)

- each of the 240 nodes used for geometry generation and rendering

has 512 MiB main memory, an Nvidia GeForce4 Ti 4400 graphics card,

an Intel e100 100 Mb NIC, and a 3Com 905c 100 Mb NIC

- each of the 24 nodes used for display has 1 GiB main memory,

a Matrox G450 graphics card, a 1 Gb Intel e1000 NIC and a

100 Mb Intel e100 NIC

- all nodes are connected via a dedicated, eXtreme Networks,

BlackDiamond, Gigabit Ethernet switch

- the tiled screen is driven by a 6 x 4 array of Benq 7765PA DLP projectors

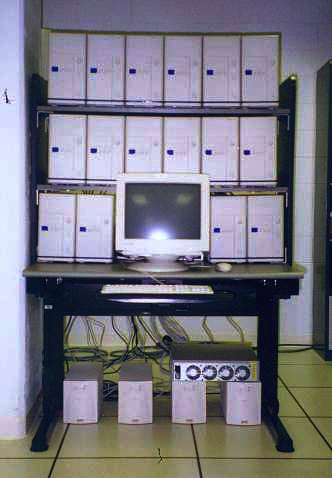

Distributed rendering system racks.

Photo courtesy of Robert Geist.

- References and further discussion
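
The display figures given for the 2002 distributed rendering system can be checked with a little arithmetic. The Python sketch below assumes, since the source does not say, that the "6 x 4" projector array means six columns by four rows and that each projector covers an equal, non-overlapping tile of the 6,144 x 3,072 image. The variable names are illustrative only.

```python
# Per-projector tile size and aggregate pixel rate for the tiled display described above.
# Assumptions: 6 columns x 4 rows of projectors, equal tiles, no overlap between tiles.
wall_width_px, wall_height_px = 6144, 3072
columns, rows = 6, 4
frames_per_second = 30

projectors = columns * rows
tile_width_px = wall_width_px // columns
tile_height_px = wall_height_px // rows
pixels_per_second = wall_width_px * wall_height_px * frames_per_second

print(f"{projectors} projectors, each showing {tile_width_px} x {tile_height_px} pixels")
print(f"{pixels_per_second:,} pixels per second across the wall")
```

Under these assumptions each projector shows a 1,024 x 768 tile, and the 24 projectors match the 24 display nodes in the list. The 240 rendering nodes and 24 display nodes together account for 264 of the 265 nodes; the source does not describe the role of the remaining node.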

Clemson Center for Geospatial Technologies (CCGT)

- GalaxyGIS Cluster

- This is a collection of over 30 Windows computers, providing over

700 cores, that have GIS programs installed and use HTCondor to distribute

GIS jobs for high throughput processing on the available computers.

- References and further discussion

Computational Center for Mobility Systems (CU-CCMS)

- initial cluster, 2007

- Arev cluster, 2008

- 430 SunBlade x6250 nodes with a total of 3,440 processing cores

- 20 Gbps Infiniband

- SLES 10 SP1 64-bit

- 35 Tflops total computing power

- listed in November 2008 as the 100th fastest computer in the world

in the

TOP500 list

- Aris - one of two Sun Fire E6900 servers, 2008

- 24 quad-core UltraSPARC IV+ processors

- 384 GiB shared memory

- Solaris 10

- Sun E25k Server, 2008

- 144 processing cores

- 680 GiB RAM

- References and further discussion

Clemson University Genomics Institute (CUGI)

Photo of CUGI computer room, 2006.

Photo from Internet Archive.

- "ten-node beowulf cluster of Linux computers", 2000

- 32-node cluster ("Guanine"),

each node contains two 1 GHz Pentium III processors

- "138 processor UNIX cluster"

[could this be a reference to the shared 128-node Cytosine cluster

in the Minigrid?]

- the Mac cluster is the block of 1U silver-colored machines in the black racks in the photo above

- References and further discussion

Clemson University Institute for Human Genetics (IHG)

- Secretariat cluster, 2019

- four compute nodes with 40 cores and 192 GB RAM each

- two high-memory compute nodes with 44 cores and 1.54 TB RAM each

- dedicated HA-NFS with 500 TB of storage

- located at the Self Regional Hall in Greenwood, SC

- References and further discussion

Interdisciplinary

- Nvidia DGX-2, 2019

- ONR provided a $316,000 grant for six faculty members to acquire

an Nvidia DGX-2 server, which has two 24-core, 2.7 GHz Intel Xeon

Platinum 8168 processors and sixteen Nvidia Tesla V100 GPUs. The

server is able to achieve processing speeds of 2 petaFLOPS.

- References and further discussion

References (with Further Discussions)

Computer Center

- Merrill Palmer of the Department of Mathematics served

as the first Director of the Computer Center. A list of

Directors, and later Vice Provosts of Computing and Information

Technology, and Chief Information Officers is given by Jerry Reel

in volume 2 of The High Seminary,

which I have corrected below and extended to the present:

- Merrill C. Palmer, 1961-1975,

obituary

- Russell S. Schouest, (acting) 1975, (permanent) 1975-1977

- Thomas E. Collins, (acting) 1977-1978

- Christopher J. Duckenfield, 1978-2004,

obituary

- David B. Bullard, (interim) 2004-2006

- James R. Bottum, 2006-2016

- Brian D. Voss, (interim) 2016-2017

- Russell Kaurloto, 2017-2021

- Brian D. Voss, (interim) 2021-2022, (permanent) 2022-present

- notes on corrections and dates:

- Palmer, 1961-1975 - not sure why Reel lists

Palmer's start as 1966 instead of 1961, perhaps because of the arrival of

the S/360 Model 40 mainframe in 1966;

resignation announced in Compusion Newsletter, March 20, 1975

- Schouest, (acting) 1975, (permanent) 1975-1977 -

see "Director Named," The State, May 29, 1975, p. 3-B;

resigned August 18, 1977

- Collins, (acting) 1977-1978 - Reel misspelled

his last name and should have indicated his temporary status;

resigned March 16, 1978

- Duckenfield, 1978-2004 -

see

"Head of Computer Center Named," Clemson University Newsletter,

vol. 17, no. 5, April 1, 1978, p. 2 (page 345 of the pdf); passed away

on April 21, 2004

- Bullard, (interim) 2004-2006 - retired August 2006

- Bottum, 2006-2016 - see the

announcement released May 10, 2006, that Jim has been named

Chief Information Officer and Vice Provost for Computing and

Information Technology effective July 17, 2006

- Voss, (interim) 2016-2017 - named as interim in July 2016

- Kaurloto, 2017-2021 - began the role July 17, 2017

- Voss, (interim) 2021-2022, (permanent) 2022-present -

named as interim in May 2021 and permanent in February 2022

- The Computer Center became part of the Division of Computing

and Information Technology (DCIT) in 1985, which was renamed as

Clemson Computing and Information Technology (CCIT) in 2007.

See Chris Duckenfield, "Director's Letter," DCIT Newsletter,

vol. 9, no. 1, Fall 1985, p. 1, in which he states that DCIT will

be an umbrella organization for DAPS, DISD, and the Computer Center.

Duckenfield was promoted a year later from Director of DCIT to

Vice Provost of Computing and Information Technology; see

"Duckenfield Named Provost,"

The Tiger, vol. 90, no. 9, October 24, 1986, p. 7.

See also

the 2007 letter

from Provost Dori Helms discussing the name change to CCIT.

- The university's first computer was initially located in Poole Hall

(also known as the P&A or P&AS building for Plant and Animal Science)

while Martin Hall was being constructed. Once Martin Hall was finished,

the computer moved there in 1962. After five years in Martin Hall,

the Computer Center operations moved to the basement of Poole Hall,

where they stayed for almost twenty years.

Reel describes the planning (and state politics) for the new

Information Technology Center (ITC) building on Highway 187

in the section "Services to Faculty and Staff," pp. 277-281, in volume 2

of his history of the university.

The Computer Center moved its main operations to the ITC in late 1986

but still kept some offices and facilities on campus (e.g.,

the VAXes moved from Riggs Hall to Poole Hall). See

this page for a list of the current CCIT locations.

- Pearce Center student interns prepared a

CCIT History Project Timeline that extends through 2006.

See page 12 of the

2014-2015 Pearce Center Annual Report.

- See a list of the various

Computer Center newsletters from the 1970s to the 2000s.

The inside cover of newsletters from mid-1975 to 1994 typically

included a brief description of the major computer systems.

Additionally, a yearly Introduction to the Clemson University

Computer Center (later titled Introduction to Clemson University

Computer Services) was published each academic year from

1979-1980 to 1992-1993; these contain a more extensive

description of facilities, often including a list of the types

and number of disk drives and tape drives. A set of pamphlets

and later brochures was published as DCIT Computer Services

from 1994 to academic year 2006-2007. This material is available

in the Clemson University Libraries Special Collections and Archives.

- The

Graduate School catalogs starting in 1964-1965 (but skipping

1967-1968, 1968-1969, and 1969-1970) as well as the

Clemson Student Handbook starting in 1969-1970 include a section

that describes the computer center and available computers.

Note that the Graduate School Catalog and Student Handbook switch

section titles from Computer Center to DCIT Computing Facilities

starting in 1987-1988 and typically become less specific as to the

computer models starting in 1992-1993.

- An online page listing "Large Computer Systems" at Clemson

University was published from 1997 to 2005; see the

first capture in 1997.

-

CCIT video tour of ITC and Network Operations Center (NOC), ca. 2008

- This

CITI Infrastructure github page dates from 2017.

First Computer

- This was a larger and more powerful desk computer than the

popular LGP-30 and had an 8,008-word magnetic drum for main memory.

I have seen some instances of university documents listing

the memory size as 16K or 16KB, but I believe those to be mistakes.

None of the source material about the RPC-4000 available

elsewhere on the web gives that as an option. E.g., see

page 7 of the

RPC-4000 Features Manual. Also, note that the RPC-4000

instruction format used 13 bits for a memory address

(page 10), so the memory addressing limit was clearly 8K 32-bit words.

-

"New Computer System Aids Clemson Research,"

The State, April 17, 1961, p. 7-A:

Because it is transistorized, the Clemson computer occupies little

more space than two ordinary office desks, but provides remarkable

operating speeds and memory capacity. It is capable of 230,000

operations per minute. Its input and output speeds range as high

as 500 characters-per-second. Both alphabetic and numeric information

may be stored and processed through the use of its 8,008-word

magnetic drum memory center or "brain."

...

The Clemson computer is the 14th of its type produced by the

manufacturer and one of five located in the South. Three of the

other four are at the Huntsville, Alabama Redstone Arsenal, and one

is in use at an Atlanta aircraft plant.

- see also:

-

RPC 4000 entry in Martin Weik,

"A Third Survey of Domestic Electronic Digital Computing Systems,"

Report No. 1115, Ballistic Research Laboratories,

Aberdeen Proving Ground, Maryland, March 1961, pp. 834-835.

-

"All Transistor New Computer Just Installed,"

The Tiger, December 1, 1961, p. 1.

- "History of Computing at Clemson - Part I,"

Computer Center Newsletter, vol. 1, no. 1, March 1978, pp. 4-8:

Clemson owes its first steps into the future and the world of

electronic data processing to Dr. Al Hind of the Mathematics

Department. During the 1950's Dr. Hind had been teaching at

Clemson during the school year and working summers at the

Naval Research Lab where he had become familiar with the uses

and capabilities of the newly developed high speed computing

machines we call computers. He saw Clemson's need for one and

went to Jack Williams. Dean Williams, who was Dean of the College,

was also a forward looking man and he immediately set up a

faculty committee to study the idea. That was in the spring of

1959. That committee's report dated May 9, 1959 recognized the

need for a computer facility at Clemson and suggested the

acquisition of an LGP-30, a small computer made by the

Royal MacBee [sic] Company.

Before arrangements could be made to purchase an LGP-30 and

set up a computer center at Clemson, several new machines had

appeared on the market causing Dean Williams and members of the

Agricultural Experiment Station Staff to request a review of the

information regarding electronic computers.

As a result, a second committee was established on March 2, 1960

and the rest is history. By then computers were into the second

generation. No longer were they huge bulky vacuum tube machines

requiring rooms full of complex air-conditioning machinery.

Instead they were transistorized, taking up a fraction as much

space and requiring little or no special installation.

The committee visited many installations in Georgia and South

Carolina and talked to representatives of most of the companies

which marketed computers at the time. IBM, which had gotten its

foot in the door at Clemson by supplying the tab equipment to

the administration, wanted the computer installation at Clemson

enough to offer to give the new center an IBM 650 vacuum tube

machine, but the committee (with much prodding from the EE

department) insisted on a transistorized machine.

The choices were narrowed down to the RPC 4000 made by Royal McBee,

and IBM's 1620. IBM, with its offices in Greenville, offered

superior maintenance services (Royal McBee's closest office was

in Atlanta) but the final choice was the RPC 4000 with its

16,000 byte [sic] drum memory. The 1620 has less than half that

amount of memory and at the time IBM said that they would

definitely not be increasing its memory capacity. As a result,

the final report to Dean Williams dated June 9, 1960 recommended

the purchase of an RPC 4000 computer system at a cost of some

$50,000. Dean Williams and President Edwards took the request to

a special session of the legislature in Columbia and the Clemson

College Computer Center was born.

The center was officially established in February of 1961 with

one and a half employees: a half time Director, Mr. Merrill

Palmer, who spent his other half teaching in the Math Department,

and a full time Secretary. The RPC 4000 arrived and was installed

in April 1961.

An interesting footnote to this part of the story is that three days

after the legislature approved the purchase of the RPC 4000, IBM

announced the addition of more memory to its 1620.

- "History of Computing at Clemson - Part II,"

Computer Center Newsletter, vol. 1, no. 2, April 1978, pp. 5-8.

[subtitled "The Life and Times of the RPC 4000 at Clemson"]

- "Yesteryear Computing,"

DCIT Newsletter, vol. 10, no. 2, Winter 1987, pp. 21-22.

- Jürgen Müller,

"The LGP-30's Big Brother,"

e-basteln, no date.

- The computer was first installed in Poole Hall.

In 1962, the computer moved to the new Martin Hall.

See "New Clemson Complex," The State, September 4, 1962.

- For a comparison with early computers at other regional schools,

see a review of

college and university computers in the Southeast US, 1955-1961.
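The memory-size argument in the first note of this section can be checked with a little arithmetic. The sketch below uses only the figures quoted above (a 13-bit address field, 32-bit words, and an 8,008-word drum); the constant and function names are illustrative and do not come from any RPC-4000 documentation, and the byte figure is only an 8-bit-byte equivalent, since the machine was word oriented.

```python
# Figures quoted in the notes above; all names here are illustrative.
ADDRESS_BITS = 13   # address field width (Features Manual, page 10)
WORD_BITS = 32      # word size cited above
DRUM_WORDS = 8008   # drum capacity reported in 1961


def addressable_words(address_bits: int) -> int:
    """Number of distinct locations an address field of this width can name."""
    return 2 ** address_bits


def bits_needed(words: int) -> int:
    """Smallest address width able to distinguish `words` locations."""
    return max(1, (words - 1).bit_length())


limit = addressable_words(ADDRESS_BITS)
print(limit)                        # 8192
print(DRUM_WORDS <= limit)          # True: 8,008 words fit in 13 bits
print(bits_needed(16 * 1024))       # 14: a 16K-word memory would not fit
print(DRUM_WORDS * WORD_BITS // 8)  # 32032 (8-bit-byte equivalent)
```

Under these assumptions, neither a 16K-word memory nor a 16KB memory matches the documented drum: the first exceeds the 13-bit addressing limit, and the second is about half of the 8,008-word capacity.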

Mainframes

- The

1966-1967 Graduate School Catalog states on page 17,

"On July 1, 1966 the Computer Center will install an IBM/360,

Model 40, with 65,000 bytes of core storage."

The IBM S/360 Model 40 and the rest of the Computer Center

operations needed more room than available in Martin Hall,

so the Computer Center moved to the basement of Poole Hall

in the summer of 1967.

See the minutes of the Research Faculty Council meeting of

April 18, 1967, in the newsletter collection,

Clemson Newsletter, 1965-1967, page 389 of the pdf:

Mr. M. C. Palmer, Director of the Computer Center, spoke to

the Council on operations and operational needs of the

Computer Center. He commented that plans are being implemented

to increase the operational time of the computer, to purchase

additional equipment and to hire needed personnel for programming

work. He stated that several machines are being ordered by

various divisions of the University and that key punching

facilities should be solved by September. He stated that the

Computer Center was scheduled to be moved to the P & AS

Building in July.

-

President's Report to Board of Trustees, 1967-1968,

states on page 15:

During the summer of 1968 the second major addition was made

to its IBM System/360, Model 40 computer. This addition,

partially supported by a grant from the National Science

Foundation, provides the largest memory that can be used

with the Model 40, and input/output equipment to provide

the most efficient utilization of that memory.

The

President's Report to Board of Trustees, 1968-1969,

states on page 20, "In July, 1968, the core storage of

the computer was increased from 131,072 bytes to 267,144 bytes."

See also "Yesteryear Computing,"

DCIT Newsletter, vol. 10, no. 2, Winter 1987, pp. 21-22.

-

Clemson Newsletter, vol. 10, no. 4, October 1, 1970, page 2:

The central processing unit, an IBM 360, has been upgraded to

model 50, which operates three times faster than the model 40.

See also "History of Computing at Clemson - Part III,"

Computer Center Newsletter, vol. 2, no. 2, October 1978, p. 11.

-

A January 18, 1973 thesis by Saifee Motiwalla cites computations run

on Clemson University's Model 155.

-

John Peck and Francis Crowder,

"A Public Health Data System,"

National Computer Conference, 1974, pages 77-80:

During January 1974 Clemson University will acquire

an IBM 370/158 and will run VS2.

- See several pictures from the computer room from 1975-1976 on

the pages entitled,

"Do Not Fold, Bend, or Mutilate," and "Computers Control Campus,"

in the

1976 TAPS yearbook, pp. 118-121.

-

In 1976, Clemson University bought a used Model 165 from Gulf Oil

Corporation and contracted with IBM to install dynamic address

translation (DAT) hardware before the system was shipped to Clemson.

A Model 165 with DAT was designated as a Model 165-II, and the DAT

facility allowed it to run the newest IBM operating systems at the

time. When the anticipated high-end IBM 3033 mainframe was announced

in 1977, Russell Schouest wrote about the decision to buy a used

Model 165 and upgrade it instead of buying a new and more expensive

Model 168 in "The IBM Garage Sale," Compusion Newsletter, vol. 5,

no. 10, May 1, 1977, p. 2:

Clemson's decision to buy a 370/165 on the used market at the time

we did was an attempt to bridge through this uncertain period in the

market place. Since the availability of the 3033 is still 1 - 1½

years away (and we may yet see the 258, 268, and 278), the 370/165

results in minimum financial exposure per unit of computing power

while waiting for the complete line to be available and announced.

A purchase value of "about four million dollars" is cited in

Chester Spell,

"Expanded Computer Complex Pays for Itself,"

The Tiger, vol. 70, no. 18, February 11, 1977, pp. 5, 11.

An installation schedule for the 165-II is given in the Computer Center

Bulletin, vol. 1, no. 39, November 5, 1976. The article,

"Center Undergoes Renovation,"

published in The Tiger, October 22, 1976, describes some of the

renovations that were done before the Model 165-II could be installed.

Mainframe computers typically required substantial HVAC cooling, space for

cabling, and special electrical power connections.

The Model 165 additionally required liquid cooling. (See page 11 of

A Guide to the IBM System/370 Model 165, November 1970.)

The later 3033 and the 3081 likewise required liquid cooling.

Starting with the XL60 in 1986, the mainframes

have been smaller in footprint and air cooled.

- See "3033 Installation," Computer Center Newsletter,

vol. 3, no. 1, September 1979, pp. 2-8.

This particular machine had been rented by the University of

South Carolina in hopes of buying it. However, because of a

procurement bidding appeal by Amdahl Corporation, the South

Carolina Budget and Control Board ruled that USC had to buy

a less expensive Amdahl 470 computer instead. In contrast,

the state approved the purchase of the IBM 3033 for Clemson

University since the IBM computer had passed the benchmark

designed by DCIT, and specified in the RFP, but the Amdahl

computer had not. The timing of these decisions allowed IBM

to sell the rented 3033 in Columbia to the state for installation

at Clemson University and also reduce the purchase cost based

on rental credits for the months that it had been located at USC.

See "Controversial IBM Computer at Clemson,"

The State, June 12, 1979, p. 1.

The complexity of the benchmark for the 1978-1979 mainframe

procurement is described in an article by Susan Keasler,

"New Computer Increases Speed, Capacity,"

The Tiger, vol. 73, no. 1, August 24, 1979, p. 12:

According to Dr. Christopher Duckenfield, director of the

department of computer services, the first draft of the RFP

(request for proposal) containing specifications and

requirements of the computer was submitted to the State

Budget and Control Board in May of 1978. After several

changes and modifications to the original, the final draft

was submitted in September.

Three companies, Itel, Amdahl,

and International Business Machines (IBM), bid on the RFP

of the computer, though neither [sic] company passed

the benchmark required by the department. A third, further

modified RFP was submitted in December. The same three

companies bid again, but IBM only in March of this year

succeeded in passing the benchmark for final specifications.

Charles H. Burr, director of the state's Computer Systems

Management, said that Clemson's benchmark was complicated

because it involved simulated interaction between 700

computer terminals and the computer while the unit ran a

program. The benchmark was developed from computer experience

and proposed use, said Dr. Arnold Schwartz, dean of

graduate studies and research at Clemson University.

- For the 1982 mainframe procurement, IBM successfully

benchmarked an IBM 3081-K. National Advanced Systems (NAS)

had initially bid an AS/9080 but declined to run the

benchmark. See "New Computer," Computer Center Newsletter,

vol. 5, no. 4, June 1982, p. 22; "Director's Letter,"

Computer Center Newsletter, vol. 6, no. 1, Fall 1982, p. 1;

and, Clemson University Benchmark Results, October 15, 1982,

25pp. This benchmark simulated an average of 1500 TSO users.

The procedural documentation and appendices for the benchmark

totaled 39 pages.

- Don Fraser, "NAS AS/XL-60 Replaces IBM 3081K,"

DCIT Newsletter, vol. 10, no. 2, Winter 1987, pp. 3-6.

- Ami Ghosh, "Vector Facility on the NAS AS/XL-V60,"

DCIT Newsletter, vol. 11, no. 1, Fall 1987, pp. 30-31.

Subsequent research using the vector facility included:

-

An implementation of vectorization for image processing.

See John Trout, Jr., "Vectorization of Morphological

Image Processing Algorithms,"

M.S. Thesis, Department of Mathematical Sciences,

Clemson University, July 1988.

- A performance comparison between the NAS AS/XL-V60 and a Cray X-MP/48.

See Edward Jennings, Jr., "Approximate Solutions to an Initial Value

Problem in Functional Differential Equations: A Comparison of Scalar

and Vector Code Performance on the Cray X-MP/48 and the NAS AS/XL-V60,"

M.S. Thesis, Department of Mathematical Sciences,

Clemson University, August 1988.

-

An implementation of vectorization for ray tracing. See

Nancy Cauthen, "Vectorization of a Ray Tracer

using a Pipelined Dataflow Strategy," M.S. Thesis,

Department of Mathematical Sciences,

Clemson University, June 1989.

-

Porting version 2g.6 of Berkeley's SPICE circuit simulator

to run on the NAS AS/XL-V60. See Keith Thoms, "The Development

and Implementation of a Methodology for Running SPICE on

Computers Utilizing Vector Architectures," M.S. Thesis,

Department of Electrical and Computer Engineering,

Clemson University, August 1990.

- The mainframe moved to the Information Technology Center (ITC)

building in Clemson Research Park in November of 1987. The Park is

located on SC Highway 187, nine miles from campus. See

C.J. Duckenfield, "The Big Move," DCIT Newsletter, vol. 11, no. 2,

Winter 1988, pp. 3-7.

- The Pilot 25 is discussed by Jim Blalock in

"MVS Mainframe or Enterprise Server,"

computing@clemson.edu Newsletter, vol. 3, no. 3, Spring 1998, page 10.

- The IBM z800 is discussed in

"New Mainframe Installation,"

computing@clemson.edu Newsletter, vol. 8, no. 2, Winter 2002-2003, page 4.
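The core-storage figures quoted for the Model 40 earlier in this section can be compared against binary sizes. The sketch below is only a consistency check on the quoted numbers; the labels and the helper name are mine, and it makes no claim about which figure in the original reports is correct.

```python
# Byte counts exactly as quoted in the catalog and President's Reports above.
QUOTED_BYTES = {
    "1966-1967 catalog": 65_000,
    "before July 1968": 131_072,
    "after July 1968": 267_144,
}


def is_power_of_two(n: int) -> bool:
    """True when n is an exact power of two (e.g. 65,536 or 131,072)."""
    return n > 0 and (n & (n - 1)) == 0


for label, size in QUOTED_BYTES.items():
    print(f"{label}: {size:,} bytes, "
          f"{size / 1024:.2f} KiB, power of two: {is_power_of_two(size)}")

print(64 * 1024)     # 65536, which "65,000 bytes" plausibly rounds
print(2 * 131_072)   # 262144, an exact doubling of 131,072
```

An exact doubling of 131,072 bytes gives 262,144, so the quoted 267,144 may be a typographical slip in the report or in transcription; that reading is an assumption, not something the sources confirm.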

VAX and Sun Servers

-

Remarks by Dr. Bill L. Atchley, President of Clemson University,

to the General Meeting of the Faculty and Staff

Wednesday, August 18, 1982, in Tillman Hall Auditorium, which appear in the

Faculty Senate Minutes, May 1982 - October 1982 Meetings,

on page 35 of the pdf:

Industry has responded in a big way to our requests for help to

offset the effects of decreased state support. For example, the

Computer Center has received more than $1 million worth of computer

equipment this year from Digital Equipment Corporation. This includes a

VAX computer to go along with one we've purchased. The VAX computers

will be installed by the Computer Center in Riggs Hall and will greatly

increase our capabilities in computer graphics and research. The

computers will be a big benefit to engineering, computer science and

the University as a whole.

-

From an announcement in the

Campus Bulletin,

The Tiger, vol. 76, no. 13, December 2, 1982, page 6:

The Microcomputer Club will meet Tuesday,

Dec. 7, at 6:30 p.m. in the Riggs VAX computer

room (room 10). VAX graphics, hardware, and

software will be demonstrated.

Room 10 was called the "graphics and research facility". See

Clemson Newsletter, October 12, 1983, page 3 (page 114 of the pdf):

Center staff members will also be available to show and tell you

all about the University's own VAX-11/780s.

The two-day open house will be Oct. 19-20, 9 a.m.-4:30 p.m., at

the Center in the P&AS Building, and at the graphics and research

facility in Riggs Hall. A bus will shuttle visitors between

the two buildings at 10-minute intervals, says Stegall.

See also the advertisement for the Computer Center Open House

on the bottom right of page 27 of

The Tiger, vol. 77, no. 9, October 13, 1983.

- From the

Annual Report of the Clemson Board of Trustees, 1982-1983, page 65:

The remote site in the basement of Riggs Hall was completed in August

1983. This facility now houses the two Digital Equipment Corporation

VAX 11/780 computer systems acquired last year. These systems are used

to: run jobs which, by virtue of their size or run-time, cannot be run on the

IBM system without severely disrupting other users of the system; access

graphic terminals and other devices not accessible from the IBM system;

communicate with mini- and microcomputer systems (including data

collection devices) not readily accessible from the IBM system.

The Computer Center has been working to provide by January 1984

videotex services to Clemson University students, faculty and staff. The

videotex system will facilitate the distribution of information about

University events through the use of low-cost computer terminals. The

University's Department of Information and Public Services is working

with Computer Center staff to develop graphics and information

displays.

- 1981 in

CCIT History Timeline:

Back-to-back grants from Digital Equipment Corp. (DEC)

enable the remote computing facility in Riggs Hall to be

converted to a high-tech computer graphics center. A 600-line

plotter, two graphics subsystems and several terminals will be

supported by a DEC VAX/VMS computer, moving Clemson beyond

its traditional IBM product base.

-

Chris Duckenfield, "Director's Letter," and Richard Nelson,

"New Graphics Center," Computer Center Newsletter, vol. 5,

no. 2, December 1981, p. 1 and p. 26, respectively.

- Christine Reynolds, "Renovation of Riggs Remote,"

Computer Center Newsletter, vol. 7, no. 2, Winter 1983, pp. 3-6.

- In 1985, the DCIT network diagram given in

"The Clemson University Computing Network 1985-1986"

shows three 780s and two 750s.

However, in that same year, Andrew Smith and Craig DeWitt

describe four 780s and one 750 in

"Videotex: a new area for user services,"

ACM SIGUCCS Newsletter, vol. 15, no. 2, 1985, pages 9-13:

The Clemson system has as its host system a DEC

VAX-11/750 minicomputer connected via DECNET to four

VAX-11/780 minicomputers.

See also Richard Nelson, "The Clemson University Academic Computing Network,"

DCIT Newsletter, vol. 9, no. 3, Spring 1986, pp. 4-5.

- The DEC VAX 8650 was ordered in 1986 according to page 61 in the

Annual Report of the Clemson Board of Trustees, 1985-1986.

See also Todd Endicott,

"Computer Center Receives Boost,"

The Tiger, vol. 80, no. 5, September 26, 1986, page 7.

Note that he calls it Prism.

- In 1988 a diagram from the Alexander and Meyer paper lists:

- VMS: 750, two microVAX

- VAXcluster under VMS: 8820, 8650, 8600 (with 8600 supporting CUFAN)

- Ultrix: 750, two microVAX (with one as front end to T-20)

I believe that this 1988 diagram is in error since hubcap

would be a 780 and possibly the 8810 at this point.

See also "Clemson University Division of Computing and

Information Technology VAX Network August 1988,"

DCIT Newsletter, vol. 12, no. 2, November 1988, pp. 10-11.

- Janet Hall, "VAX Systems Relocation," DCIT Newsletter,

vol. 11, no. 3, Spring/Summer 1988, p. 10.

- In 1988, a DEC equipment grant helped buy the 8810 and the 8820.

See Jenny Munro, "Clemson Gets Two Digital Computers,"

Greenville News, October 7, 1988, and

S. Dean Lollis,

"Digital Gives Computer Grant,"

The Tiger, vol. 82, no. 7, October 7, 1988, page 9.

- Jay Crawford, "Changes to the Vaxcluster,"

DCIT Update Newsletter, vol. 14, no. 1, September 1990, p. 7.

- Richard Nelson remembers:

The first VAX was temporarily installed in the computer room

in the P&AS building (Poole Hall) while a new computer room

was being prepared in Riggs Hall. Then all the VAXes were

moved to Riggs Hall. Some time after the mainframe was moved

to Wild Hog Road, the DEC systems were moved back to Poole Hall.

also

With the addition of an 8650 to the cluster, we started to

retire the 11/780, but retained one of them and installed

DEC Ultrix on it. That was the first hubcap.

That was eventually replaced with a DECstation (I forget the

model number), which was DEC's first entry into the 64 bit

market using MIPS processors. It also ran Ultrix. (They

weren't allowed to call it UNIX at the time.)

Note that Mike Marshall remembers that a 750 was the first hubcap,

and thus the 780 would have been the second.

- See an MP4 video of the

DEC VTX system at Clemson University, ca. 1986,

which includes a demonstration of CUFAN,

courtesy of Richard Nelson.

- DoD Internet Host Table, May 26, 1987

HOST : 26.0.0.80 : TECNET-CLEMSON.ARPA : NCR-TOWER-1632 : UNIX : X.25 :

HOST : 192.5.219.1 : HUBCAP.CLEMSON.EDU : VAX-11/780 : ULTRIX : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 192.5.219.2 : PRISM.CLEMSON.EDU : VAX-8650 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP

HOST : 192.5.219.3 : EUREKA.CLEMSON.EDU : VAX-8600 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

- DoD Internet Host Table, October 3, 1988

HOST : 26.0.0.80 : TECNET-CLEMSON.ARPA : GOULD-9005 : UNIX : X.25 :

HOST : 192.5.219.1 : HUBCAP.CLEMSON.EDU : VAX-11/780 : ULTRIX : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 192.5.219.2 : PRISM.CLEMSON.EDU : VAX-8650 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 192.5.219.3 : EUREKA.CLEMSON.EDU : VAX-8600 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

- DoD Internet Host Table, November 30, 1989

HOST : 26.4.0.80 : TECNET-CLEMSON.ARPA,TECNET-CLEMSON.JCTE.JCS.MIL : GOULD-9005 : UNIX : X.25 :

HOST : 130.127.8.1 : HUBCAP.CLEMSON.EDU : VAX-8810 : ULTRIX : TCP/TELNET,TCP/FTP,TCP/SMTP,UDP/DOMAIN :

HOST : 130.127.8.2 : PRISM.CLEMSON.EDU : VAX-8650 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 130.127.8.3 : EUREKA.CLEMSON.EDU : VAX-8600 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 130.127.8.11, 130.127.16.1 : ENG.CLEMSON.EDU,OMNI.CLEMSON.EDU : SUN-4/280 : UNIX : TCP/TELNET,TCP/FTP,TCP/SMTP,UDP/DOMAIN :

HOST : 130.127.8.80 : CS.CLEMSON.EDU : SUN-4/280 : UNIX : TCP/TELNET,TCP/FTP,TCP/SMTP,TCP/FINGER :

HOST : 130.127.8.82 : TECNET.CS.CLEMSON.EDU : SUN-1/180 : UNIX : TCP/TELNET,TCP/SMTP,TCP/FTP :

[a typo? - TECNET.CS should be a SUN-3/180, as in the March 1990 table?]

- DoD Internet Host Table, March 12, 1990

HOST : 26.4.0.80 : TECNET-CLEMSON.ARPA,TECNET-CLEMSON.JCTE.JCS.MIL : GOULD-9005 : UNIX : X.25 :

HOST : 130.127.8.1 : HUBCAP.CLEMSON.EDU : VAX-8810 : ULTRIX : TCP/TELNET,TCP/FTP,TCP/SMTP,UDP/DOMAIN :

HOST : 130.127.8.2 : PRISM.CLEMSON.EDU : VAX-8650 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 130.127.8.3 : EUREKA.CLEMSON.EDU : VAX-8600 : VMS : TCP/TELNET,TCP/FTP,TCP/SMTP :

HOST : 130.127.8.11, 130.127.16.1 : ENG.CLEMSON.EDU,OMNI.CLEMSON.EDU : SUN-4/280 : UNIX : TCP/TELNET,TCP/FTP,TCP/SMTP,UDP/DOMAIN :

HOST : 130.127.8.80 : CS.CLEMSON.EDU : SUN-4/280 : UNIX : TCP/TELNET,TCP/FTP,TCP/SMTP,TCP/FINGER :

HOST : 130.127.8.82 : TECNET.CS.CLEMSON.EDU : SUN-3/180 : UNIX : TCP/TELNET,TCP/SMTP,TCP/FTP,UDP/DOMAIN :

- In 1992, a Department of Computer Science internal document listed

the VAX equipment operated by the computer center as

an 8810 and a VAXcluster of 8820, 6000-410, and 8650.

- Cathy Brown and Joe Swift, "VAX Computing Improvements,"

DCIT Update Newsletter, vol. 16, no. 4, March 1993, p. 25.

- Jay Crawford, "Hubcap's Future OS: DEC's OSF/1,"

DCIT Update Newsletter, vol. 17, no. 2, November 1993, p. 8, and

Joe Swift, "Under Construction,"

DCIT Update Newsletter, vol. 17, no. 2, November 1993, pp. 8-9,

which compares the DEC VAX 8810 and DEC 3000-500.

- Jay Crawford, "OpenVMS VAXcluster Sizes Down and Speeds Up,"

DCIT Update Newsletter, vol. 17, no. 4, March 1994, p. 9.

- Oracle running on a SPARCserver 10 is described in Kevin Batson,

"The Clemson University Data Warehouse,"

DCIT Update Newsletter, vol. 17, no. 5, May 1994, pp. 11-12.

-

"Hubcap (UNIX) Machine Upgraded,"

computing@clemson.edu Newsletter, vol. 2, no. 1, Fall 1996, p. 6.

- In 1996, the Computer Center announced the end of life for the

VMS systems as summer 1997. See Dave Bullard,

"Many Products and Protocols No Longer Considered Strategic,"

computing@clemson.edu Newsletter, vol. 2, no. 2, Fall 1996, pp. 4-5.

- Helios and a new hubcap were acquired in 1997. See:

- Chris Duckenfield,

"Clemson University Research Foundation Purchases

Two Supercomputers for University Research,"

computing@clemson.edu Newsletter, vol. 3, no. 1, Fall 1997, page 1.

- Cynthia Kopkowski,

"Research Foundation Funds New Supercomputers from Royalties,"

The Tiger, vol. 91, no. 2, September 5, 1997, page 10.

- Dave Bullard,

"High Performance Computing Opportunities,"

computing@clemson.edu Newsletter, vol. 4, no. 1, Fall 1998, page 5.

- Mike Marshall, "Yet Another New Hubcap (YANH),"

computing@clemson.edu Newsletter, vol. 3, no. 3, Spring 1998, p. 11.

- A Sunfire V880 is identified as the new email server in

"Email Updates,"

computing@clemson.edu Newsletter, vol. 8, no. 2, Winter 2002-2003, page 2,

and

"Email Updates for Fall 2003,"

computing@clemson.edu Newsletter, vol. 9, no. 1, Fall 2003.
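The DoD Internet Host Table entries quoted earlier in this section use a colon-delimited layout: keyword, addresses, names, machine type, operating system, and protocols, with comma-separated lists inside a field. A minimal sketch of reading one entry follows; the class and function names are hypothetical, and the parser handles only HOST lines shaped like the ones quoted above, with or without the trailing colon.

```python
from dataclasses import dataclass


@dataclass
class HostEntry:
    addresses: list[str]
    names: list[str]
    cpu_type: str
    os: str
    protocols: list[str]


def _split_list(field: str) -> list[str]:
    """Split a comma-separated field, dropping blanks and stray spaces."""
    return [item.strip() for item in field.split(",") if item.strip()]


def parse_host_line(line: str) -> HostEntry:
    """Parse one 'HOST : ... :' line into its fields (hypothetical helper)."""
    fields = [f.strip() for f in line.split(":")]
    if fields and fields[-1] == "":
        fields.pop()  # drop the empty piece after a trailing colon
    if len(fields) < 3 or fields[0] != "HOST":
        raise ValueError(f"not a HOST entry: {line!r}")
    fields += [""] * (6 - len(fields))  # pad any omitted optional fields
    _, addrs, names, cpu, opsys, protos = fields[:6]
    return HostEntry(_split_list(addrs), _split_list(names),
                     cpu, opsys, _split_list(protos))


# Entry copied from the November 30, 1989 table quoted above.
entry = parse_host_line(
    "HOST : 130.127.8.1 : HUBCAP.CLEMSON.EDU : VAX-8810 : ULTRIX : "
    "TCP/TELNET,TCP/FTP,TCP/SMTP,UDP/DOMAIN :"
)
print(entry.names[0], entry.cpu_type, entry.os)
```

Parsing the successive tables this way makes it easy to line up the hardware reported for a given name, such as HUBCAP changing from a VAX-11/780 to a VAX-8810 between the 1988 and 1989 tables.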

Condor

- Sebastien Goasguen,

"Windows Condor Pool at Clemson University,"

Distributed Organization for Scientific & Academic Research (DOSAR)

Workshop, April 2008.

- Cohen Simpson,

"Grid Computing Tackles Issues,"

The Tiger, vol. 102, no. 16, September 19, 2008, pp. 1, 7.

- Dru Sepulveda,

"Deploying and Maintaining a Campus Grid at Clemson University,"

MS Thesis, Clemson University, 2009.

- See also

Douglas Thain, Todd Tannenbaum, and Miron Livny,

"Distributed Computing in Practice: The Condor Experience,"

Concurrency and Computation: Practice & Experience, vol. 17, no. 2-4,

February 2005, pp. 323-356.

(preprint at Wisconsin)

- In addition to providing CPU cycles for Clemson University

users, Condor also provided many CPU cycles for marine biology research

for Dr. Robert Chapman with the South Carolina Department of Natural

Resources and for projects for the World Community Grid Web, including

cancer research and Nutritious Rice for the World.

- The usefulness of the Condor resources was diminished when

energy-saving initiatives led to the eventual powering off of the

lab computers after-hours. In addition, the emergence of the Palmetto

cluster and reduced CCIT lab resources led to the eventual demise

of the Condor system in general use at Clemson University.

However, HTCondor is currently used by the GalaxyGIS Cluster

in the Clemson Center for Geospatial Technologies.

Palmetto

-

In 2006, when Jim Bottum joined Clemson University as CIO and

Vice Provost, he emphasized strategic partnerships between CCIT

and Clemson University researchers. The funding and the research

results that have come from these combined efforts further

propelled the university into national prominence in

high-speed networking and high-performance computing.

- The Palmetto Cluster supercomputer has been financed

as a condominium cluster, based on the model developed at the

University of Southern California and then brought to Clemson

by CTO Jim Pepin.

See Jim Pepin, "High Performance Computing and Communication (HPCC),"

Chapter 8, in Edward Blum and Alfred Aho (eds.),

Computer Science: The Hardware, Software and Heart of It,

Springer, 2011.

Clemson University researchers who own computing nodes

within the Palmetto cluster have been remarkably open to allowing shared

use of their nodes when otherwise idle.

-

"Clemson Makes Important Move in High Performance Computing World,"

CCIT News, June 9, 2008:

The first phase of development, completed in December 2007,

provided approximately 15 Teraflops (TF) of computing power

and moved Clemson from outside the Top 500 to within the Top 100

computing sites in the world. A second phase was implemented

last week, adding another 16 TF to the cluster, which when

fully developed could operate at up to 100 TF. "This phased

implementation is a cost-effective and prudent development of

the cluster, allowing our investments to track with user needs",

said Jim Bottum, Clemson's vice provost and chief information officer.

The Palmetto Cluster is being made possible through a strong

partnership between faculty and IT administration, with the

faculty 'owners' providing a significant percentage of the

funding for the compute nodes while the university provides

the rest of the nodes plus the infrastructure to build the cluster

(racks, switches, power, etc.). According to Clemson's chief

technology officer, Jim Pepin, the system currently benchmarks

at just over 31 TF and the efficiency using Myrinet 10 Gbps

hardware is 81 percent.

- Randy Martin remembers that the highest CPU-only benchmark rating

(prior to the inclusion of GPUs) used 1549 compute nodes and

benchmarked at 92.48 TFlops in April 2011. The cluster had a

Myrinet 10G interconnect. With the later addition of GPU compute

nodes, Mellanox 56G Infiniband interconnect was added.

Randy also remembers that Math had a large-memory node

(2TB RAM) added to Palmetto around 2011.

- In 2017, Amy Apon (Principal Investigator) received a $1M

National Science Foundation (NSF) grant

MRI 1725573 to improve Palmetto.

(Co-Principal Investigators were Jill Gemmill, Dvora Perahia,

Mashrur Chowdhury, and Kuang-Ching Wang.)

See

"Clemson Palmetto Cluster on Track for $1 Million Upgrade,"

HPCwire, October 5, 2017.

Amy Apon in front, and L-to-R behind her:

Dennis Ryans, Will Robinson, Jay Harris, K.C. Wang,

Randy Martin, Paul Wilson, Lindsay Shuller-Nickles, Robert Latour,

Jill Gemmill, Matt Saltzman, Ulf Schiller, Olga Kuksenok, and Jim Pepin.

Photo from the article.

-

NSF has also supported the purchase of

compute nodes for Palmetto through awards:

-

MRI 1228312, Jill Gemmill (Principal Investigator), 2012, $1M+;

-

II NEW 1405767, Amy Apon (Principal Investigator), 2014, $700K+;

and,

-

MRI 2018069,

Feng Luo (Principal Investigator), 2020, $651K.

- Bailey Troutman,

"High-Performance Computing Enhancements Bolster Artificial

Intelligence/Machine Learning Research,"

Clemson News, August 5, 2024.
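The Palmetto benchmark figures quoted in this section can be related to one another with simple arithmetic. The sketch below assumes that the 81 percent "efficiency" means measured performance divided by theoretical peak, as in TOP500 listings; the source does not define the term, so the implied peak is an estimate, and all names are illustrative.

```python
def implied_peak_tf(measured_tf: float, efficiency: float) -> float:
    """Theoretical peak implied by a measured rate and an efficiency ratio.

    Assumes efficiency = measured / peak, which the source does not state.
    """
    return measured_tf / efficiency


# Figures quoted from the June 2008 CCIT News item above.
phase1_tf, phase2_tf = 15.0, 16.0
measured_2008_tf = phase1_tf + phase2_tf      # "just over 31 TF"
peak_2008_tf = implied_peak_tf(measured_2008_tf, 0.81)

# Figures recalled by Randy Martin for April 2011 (CPU-only run).
nodes_2011 = 1549
measured_2011_tf = 92.48
per_node_gf = measured_2011_tf * 1000 / nodes_2011

print(f"2008 measured: {measured_2008_tf:.0f} TF, "
      f"implied peak: {peak_2008_tf:.1f} TF")
print(f"2011 average per node: {per_node_gf:.1f} GFlops")
```

On these assumptions the 2008 system had an implied peak of roughly 38 TF, and the 2011 run averaged about 60 GFlops per compute node.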

Palmetto 2

- Note that the Palmetto 2 name has been used in TOP500

entries since

2013.

Cloud Lab

-

Marla Lockaby,

"Clemson Selected for $10M CloudLab Project,"

Upstate Business Journal, August 25, 2014.

- Dmitry Duplyakin, et al.,

"The Design and Operation of CloudLab,"

USENIX Annual Technical Conference, 2019.

- See Section 13.3, CloudLab Clemson, in the

description of CloudLab hardware.

Engineering Computer Laboratory

- The hybrid computer was purchased with funding from NSF and

the Appalachian Regional Development Act,

cited on page 12 in the

President's Report to Board of Trustees, 1969-1970.

- A grant from the Self Foundation allowed the College of Engineering

to provide dial-in service to a PDP-8 minicomputer running TSS/8, a

time-sharing operating system later marketed as the DEC EduSystem, as

part of teaching programming to students at sixteen high schools.

See Ross Cornwell,

"Boobtubes and Computers - Newest Members in Class,"

Citadel vs Clemson Football Program, September 9, 1972, pages 7-8.

Chemical Engineering

- "Chemical Engineering Receives $200,000 Computer From Dow Chemical,"

Clemson Newsletter, vol. 8, no. 1, August 15, 1968, p. 13 (p. 220 of the pdf):

The Chemical Engineering Department received a gift of a GE 312 Computer

from the Dow Chemical Company. The computer, valued at over $200,000, will

be used for studies in direct digital control and process simulation.

- "New Computer System,"

The Tiger, vol. 62, no. 2, August 30, 1968, page 6. [source of photo used above]

- Charles Littlejohn, Jr.,

"ChE Educator: Duane Bruley of Clemson,"

Chemical Engineering Education, vol. 4, no. 2, Spring 1970, pages 58-61:

Dr. Bruley is in charge of the department's process control

computer laboratory. This facility includes a

GE-312 digital process control computer and

peripheral equipment which was a competitive

gift awarded by Dow Chemical Co., Midland,

Mich., on the basis of a proposal submitted by

the department. The laboratory also contains a

TR-48/DES-30 analog/digital logic package

which was granted by the National Science Foundation.

Note that Bruley was also Clemson University's tennis coach.

Electrical and Computer Engineering

- CLASSIC 8: See Tom and Carolyn Drake,

"Tribute to Dr. Lyle Wilcox,"

Clemson News, October 15, 2018:

Lyle had purchased a DEC PDP-8 minicomputer which arrived in the

fall of 1966. Maurice Wolla and I were the only faculty members

with prior computer experience at Michigan State University. I

had been a member of the Engineering Computer Laboratory at

Michigan State with both software and hardware experience. The

PDP-8 became our launching point into computer engineering.

The PDP-8 was followed by a PDP-15/EAI-680 hybrid and a PDP-11.

- The equipment list appeared in the August 1975 edition of the

ECE "Graduate Study and Research" booklet, and Tom Drake sent me a

photo of the list.

- Tom Drake remembers:

The PDP-15/EAI-680 system was upgraded several times:

- DEC VT-15 Graphics Display with Light Pen

- VW-01 Writing Tablet

- MAP-300 Interfaced to DEC PDP-11/Uni-channel

- 9-Track Magnetic Tape Drive

The MAP-300 performed FFTs and was used in a speech recognition project.

The Self Foundation purchased the following:

- PDP-8 running TSS/8 with 16 Dial-in Modems

- PDP-11 running RSTS with 16 Terminals (Instruction Development Facility?)

- Add interfaces to PDP-8

...

Various research projects had minicomputer systems associated with

specialized research.

- Tom Drake also remembers some computer systems that were donated

to the ECE department but that were not put into use. Instead, they were

surplused due to lack of space and support funds:

- two RCA 301 computers donated by Southern Bell in 1971

(see

Clemson Newsletter, vol. 11, no. 7, November 15, 1971, page 14,

which is page 135 of the pdf)

- a PDP-11 system donated by Greenwood Mills

- a PDP-8 system donated by a chemical company near Greenville

- Tom Drake does not remember a Packard Bell (later Raytheon)

TRICE digital differential analyzer that was described as loaned

from NASA in 1971.

TRICE stands for Transistorized Realtime Incremental Computer Expandable.

See

Clemson Newsletter, vol. 11, no. 7, November 15, 1971, page 14

(which is page 135 of the pdf).

- Harris 800:

Clemson Newsletter, vol. 23, no. 15, November 30, 1983, page 1

(which is page 170 of the pdf):

$329,000 Harris 800 computer system donated by the

Harris Corp. of Melbourne, Fla.

- PARL

- This 1997 web capture of the

PARL home page has links that describe the

Clemson Dedicated Cluster Parallel Computer

and Grendel. See later captures of the page for descriptions of

subsequent clusters.

- In 1999, PARL hosted the

Beowulf Underground as the earliest centralized forum for dissemination

of information on how to build and use Beowulf systems. This site

continued until 2003.

See Dan Orzech,

"Slaying costs: Linux Beowulf," Datamation, August 30, 1999,

which cites Beowulf Underground. (Note that the Beowulf Underground

domain name has been used for other purposes by a new owner

since 2021.)

- An early overview of the Minigrid is presented on the web page,

Clemson Computational Minigrid Supercomputing Facility:

A collection of Beowulf Clusters interconnected to form a

computational grid. The grid consists of 4 Beowulf clusters;

Adenine, a 64 node cluster located in the Parallel Architecture

Research Lab and used primarily for system software research;

Thymine, a 32 node cluster dedicated to the Center for the

Advanced Engineering of Films and Fibers; Guanine, a 32 node

cluster dedicated to the Clemson University Genomics Institute,

and Cytosine, a 128 node cluster shared by all the groups.

Each node in each cluster contains two 1 GHz Pentium III

processors. The clusters internal networks are connected

together through multiple trunked gigabit fiber links.

With these links, and the modifications we've made to the

Scyld Beowulf Linux Operating System running on the machines,

it is possible for us to "borrow" nodes from clusters

connected to the grid, allowing up to 512 processors to be

assigned to a single job on any of the attached clusters!

The Minigrid was funded in 2000 by

NSF Award EIA-0079734, Walt Ligon (Principal Investigator), $662K.

The expanded system is described in

"Computational Mini-Grid Research at Clemson University,"

Parallel Architecture Research Lab, November 19, 2002.

- Walt Ligon remembers:

The first cluster built by PARL was 4 DEC Alphas (mikey, donny, leo,

and raph) with switched FDDI 100mbps networking. That was later

expanded to 12 (all super heroes and their alt). These did not run

Linux, and so were technically not Beowulf. These were used by the

wireless communication group and the electromagnetics group with

assistance from PARL.

Grendel, the 3rd Beowulf (the first two being Wiglaf and Hrothgar

at NASA Goddard) was built next with switched 100mbps ethernet

(the first beowulf with switched 100mb). The switch went to Goddard for

the summer and was used on Hrothgar with a sizeable improvement -

pushing for switched networking as the standard after that.

The "mini-grid" was the first large cluster on campus with 256

dual-processor nodes and switched gigabit networking. The cluster

had 4 sections connected by dedicated fiber. 3 sections were

dedicated to different groups (PARL, Genomics, CAEFF) and the

remaining 128 nodes shared. This was the basis for the condo system

that became Palmetto. Corey Ferrier learned about managing parallel

systems on this system. 512 processors total between the 4 chunks:

128 shared, 64 PARL, 32 CAEFF, 32 Genomics. This system was widely

used by ECE, ME, Math, Genomics, and CAEFF.

There were a few other project clusters:

Travelwulf - 5 POS nodes velcro'ed together. When we got to

security we disassembled it and each student carried part.

When we got to Supercomputing we re-assembled.

Aetherwulf - 16 nodes, the next generation of Travelwulf made

with SBCs in a transparent box with a case. Did NOT go on an

airplane. Both of these were used primarily as portable

demonstration systems for parallel computing and parallel I/O.

Webwulf - 8 nodes was used with a satellite information system

developed at Goddard Space Flight Center. Users could come in

via WWW, search for data using temporal, geographic, and sensor

queries, the system would kick off a parallel job to load the

data, process it with standard algorithms, and make it available

for download. The data was captured in the lab with dishes

provided by NASA.

- WCNITC: Randy Martin believes that this was the first cluster

at Clemson University to benchmark at just over 1 TFlops.

Computer Science (later School of Computing)

- see

Robert Geist CV

- see DoD Internet Host Tables listed above in the reference section

for VAX/Sun

- Mike Westall remembers about the BladeCenter:

The server had 3 chassis each containing up to 14 blades.